I talked to a contractor last month who spent $4,000 on an AI tool and another $1,200 on training videos. Six weeks later, only one person on his eight-person team was using it consistently. The others had watched the tutorials, nodded along, and then gone right back to the old way of doing things.

When I asked what happened, he said: “They just didn’t get it.”

That’s the polite version. The real story is that the training failed before it started—not because the team wasn’t capable, but because the training wasn’t built for how small teams actually work.

The Problem Most Small Businesses Face

Here’s what typically happens: A business owner buys an AI tool after seeing a demo or hearing a recommendation. The vendor provides access to a library of tutorial videos or a “getting started” guide. The owner sends the link to the team and says, “Watch these and start using it.”

Then nothing happens. Or worse, something does happen—people log in once, get confused, and quietly decide the old way is easier.

The tool sits unused. The investment becomes a sunk cost. And everyone assumes AI “just doesn’t work for us.”

But the problem isn’t the tool. It’s the training approach.

Why Generic Training Doesn’t Work for Small Teams

Most vendor-provided training is designed for generic use cases. It shows you how the tool works in theory, not how it fits your specific workflow. For a team running jobs, managing schedules, or handling client communication, that gap between “here’s what the button does” and “here’s how this helps me finish today’s work” is where adoption dies.

There’s also the issue of confidence. In larger companies, there’s usually an IT department or a dedicated person who handles new tools and trains the team. In small businesses, everyone is wearing multiple hats. If someone doesn’t feel confident using the tool after the first few tries, they won’t keep experimenting—they’ll default back to what they know works.

What Actually Works: Work-Tied Guidance

Training sticks when people see the tool reduce real work inside a real task they already own. Generic training leaves each person to bridge that gap alone. Work-tied guidance closes it for them.

Work-tied guidance has one job: Connect the tool to a specific task, with a clear before and after. If someone finishes one recurring task faster or with fewer mistakes in week one, confidence builds. If they do not see immediate value in the work they already have, usage fades.

A useful test is simple: After training, can a team member name the one task the tool supports, and describe what “better” looks like for that task? If they cannot, training stayed generic.

How to Know If Training Is Actually Working

The best indicator of successful training is simple: consistent usage. Not perfect usage—consistent usage. Are people logging in regularly? Are they using the tool for the tasks you identified during setup? Are they asking questions that show they’re pushing past the basics?

Here are a few other signs to watch for:

Team members start referencing the tool in daily conversations. Instead of saying “I’ll write that email,” they say “I’ll have the AI draft that and clean it up.” That shift in language means the tool is becoming part of their process, not an add-on they’re trying to remember.

People bring up specific use cases you didn’t train them on. This means they’re experimenting and thinking about where else the tool might help. That’s adoption moving from compliance to ownership.

The questions change. Early on, people ask “how do I…” questions. Later, they ask “can I…” questions. That’s the difference between learning mechanics and exploring possibilities.
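If your tool lets you export a usage log, “consistent usage” can be turned into a number. The Python sketch below is one possible way to do that, not a feature of any particular tool. It assumes a hypothetical log of (person, date) entries, and the function names and the 75% cutoff are illustrative choices, not a standard.

```python
from collections import defaultdict
from datetime import date


def active_weeks(events):
    """Map each person to the set of (ISO year, ISO week) pairs in which they used the tool."""
    weeks = defaultdict(set)
    for person, day in events:
        iso = day.isocalendar()
        weeks[person].add((iso[0], iso[1]))
    return weeks


def consistent_users(events, total_weeks, min_share=0.75):
    """Return people active in at least min_share of the weeks since launch.

    min_share=0.75 is an assumed cutoff; pick whatever fits your team.
    """
    if total_weeks <= 0:
        raise ValueError("total_weeks must be positive")
    weeks = active_weeks(events)
    return sorted(
        person for person, seen in weeks.items()
        if len(seen) / total_weeks >= min_share
    )


# Hypothetical four-week log: Ana uses the tool every week, Ben only in week one.
sample_events = [
    ("Ana", date(2024, 3, 4)),
    ("Ana", date(2024, 3, 11)),
    ("Ana", date(2024, 3, 18)),
    ("Ana", date(2024, 3, 25)),
    ("Ben", date(2024, 3, 4)),
    ("Ben", date(2024, 3, 5)),
]

print(consistent_users(sample_events, total_weeks=4))  # prints ['Ana']
```

Counting active weeks rather than total logins keeps one busy afternoon of experimenting from looking like adoption.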

How to Recognize Post-Launch Stalls

Even when training starts well, adoption stalls in predictable ways. The key is to spot the stall early, before the team decides the tool is not worth the effort.

Stall 1: the tool gets used on the wrong work. You see one-time experimentation, then silence. People describe outputs as “interesting” but not “useful.” The tool shows activity, but not saved time, reduced rework, or faster turnaround.

Stall 2: usage concentrates in one person. The team starts treating that person like a service desk for the tool. Requests pile up. Everyone else stays dependent, and adoption never becomes normal behavior.

Stall 3: questions go quiet. In week one, people ask “how do I…” questions about mechanics. By week six, they have stopped asking anything. That usually signals uncertainty and avoidance, not mastery.
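Stall 2 is the easiest of the three to check with data. As a rough sketch, assuming you can list who ran each use of the tool over some period, the Python below computes the share of all usage that belongs to the single heaviest user. The function names and the 60% warning level are assumptions for illustration, not an established benchmark.

```python
from collections import Counter


def top_user_share(uses):
    """Return (heaviest user, their fraction of all uses). `uses` holds one name per use."""
    counts = Counter(uses)
    if not counts:
        return None, 0.0
    person, n = counts.most_common(1)[0]
    return person, n / sum(counts.values())


def is_concentrated(uses, warn_at=0.6):
    """Flag the log when one person accounts for more than warn_at of usage (assumed level)."""
    _person, share = top_user_share(uses)
    return share > warn_at


# Hypothetical period: Ana ran the tool eight times, Ben twice.
sample_uses = ["Ana"] * 8 + ["Ben"] * 2

print(top_user_share(sample_uses))   # prints ('Ana', 0.8)
print(is_concentrated(sample_uses))  # prints True
```

A high share is a prompt for a conversation, not a verdict: the goal is to find out whether others are routing requests through one person instead of running the task themselves.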

How to Evaluate Whether Your Training Approach Will Stick

Use these criteria to judge whether your training matches how small teams work.

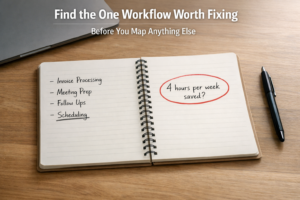

1. Single-task focus: Does training anchor on one recurring task with clear value, or does it try to cover the whole tool at once?

2. Real work connection: Does training use current work with real stakes, or generic examples that require translation back into daily operations?

3. Shared capability: Does more than one person feel able to run the task without help, or has usage become concentrated in one person?

4. Built-in follow-up: Is there a planned check-in after launch, or does training end the day the videos end?

5. Evidence over opinions: Do you track consistent usage on the agreed task, or rely on whether people say they like the tool?

Making Training Work for Your Team

AI adoption doesn’t fail because teams can’t learn. It fails because the training doesn’t match how small businesses actually operate. Generic tutorials, one-time sessions, and vendor walkthroughs aren’t designed for teams juggling multiple responsibilities with limited time.

What works is practical, work-tied guidance that starts small, builds confidence through real application, and continues past the first week. When training is tied to daily work and reinforced over time, adoption becomes natural—not forced.

If you’re thinking about adding AI to your team’s workflow, the question isn’t just “which tool should we use?” It’s “how will we make sure our team actually uses it?” That second question matters more than the first.